Framework-Based Approach to AI Risk Assessment

AI risk assessment is no longer theoretical or purely compliance-driven. It has become a systems discipline that directly affects how AI initiatives scale, fail, or survive regulatory and operational scrutiny. Organizations that use AI in regulated, revenue-critical, or safety-critical areas cannot rely on ad hoc checks or isolated controls, because that approach lets hidden risks accumulate.

This blog post is written for decision makers, AI leaders, and senior engineers responsible for approving, designing, or governing production AI systems. It focuses on framework-based approaches that turn abstract AI risk into structured, defensible decisions that can be applied across the AI lifecycle.

What Is an AI Risk Assessment Framework?

An AI risk assessment framework is a structured decision system that defines how AI risk is identified, evaluated, owned, and managed across the AI lifecycle.

At its core, a framework establishes structure and accountability. It clarifies who is responsible for risk decisions, how trade-offs are evaluated, and when escalation or intervention is required.

AI TRiSM (AI Trust, Risk, and Security Management) provides the technical enforcement layer that turns this governance into practice. It operationalizes framework intent through runtime controls, continuous validation, monitoring, and reporting, applied consistently across AI stacks and business units.

A framework comprises the following:

- Governance structure: Defined roles, decision authority, and escalation paths

- Risk taxonomy and classification: Standardized risk categories, levels, and rating criteria

- Assessment methodology: Defined steps, tools, templates, and measurement standards

- Acceptance criteria and decision thresholds: Risk tolerance, approval rules, and trade-off boundaries

- Monitoring and continuous assessment plan: Metrics, review frequency, alert thresholds, and reassessment triggers

- Documentation and audit trail: Assessment records, decisions, change history, and incident logs
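To make these components concrete, here is a minimal Python sketch of how a risk taxonomy, rating criteria, and decision thresholds might be encoded. Every name in it (`Level`, `RiskEntry`, `decide`) and the threshold values are illustrative assumptions, not part of any published framework. A real program would take its scales and tolerances from its own governance policy.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical three-point rating scale from the risk taxonomy."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class RiskEntry:
    system: str        # AI system under assessment
    category: str      # taxonomy category, e.g. "bias"
    likelihood: Level
    impact: Level
    owner: str         # accountable role from the governance structure

    @property
    def score(self) -> int:
        # Simple likelihood x impact rating (range 1..9).
        return int(self.likelihood) * int(self.impact)


def decide(entry: RiskEntry, accept_max: int = 2, escalate_min: int = 6) -> str:
    """Apply assumed decision thresholds to a rated risk."""
    if entry.score >= escalate_min:
        return "escalate"   # above tolerance: goes to the escalation path
    if entry.score <= accept_max:
        return "accept"     # within tolerance: owner may accept
    return "mitigate"       # in between: controls required before approval


entry = RiskEntry("credit-scoring", "bias", Level.MEDIUM, Level.HIGH, "model-risk-officer")
decision = decide(entry)
```

Keeping the thresholds as explicit parameters means the acceptance criteria live in one reviewable place, and each `RiskEntry` plus its decision can be stored as part of the audit trail.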

Why Structured AI Risk Assessment Frameworks Matter

AI systems behave differently by design. The same input can generate different outputs depending on model state, randomness, and context. Behavior is learned from data rather than explicitly programmed, which produces emergent outcomes that cannot be exhaustively predicted or debugged in the traditional sense.

This shift fundamentally changes what risk assessment must account for. The classic confidentiality, integrity, and availability (CIA) model does not fully capture AI risk.

Structured frameworks impose discipline where AI systems introduce ambiguity. They force explicit ownership for model intent, data provenance, system behavior, and failure response.

These frameworks, however, are only one part of the broader AI risk assessment process.

Explore the AI risk assessment process in detail: Everything You Need to Know About AI Risk Assessment

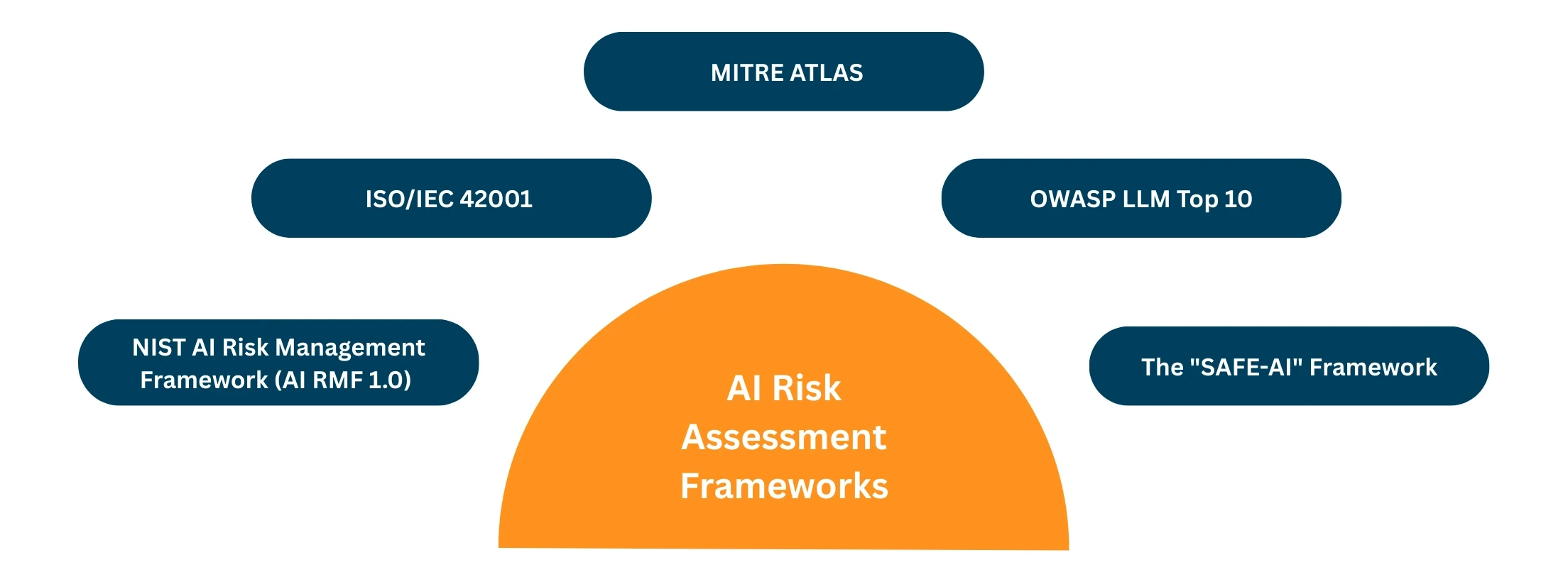

Key AI Risk Assessment Frameworks

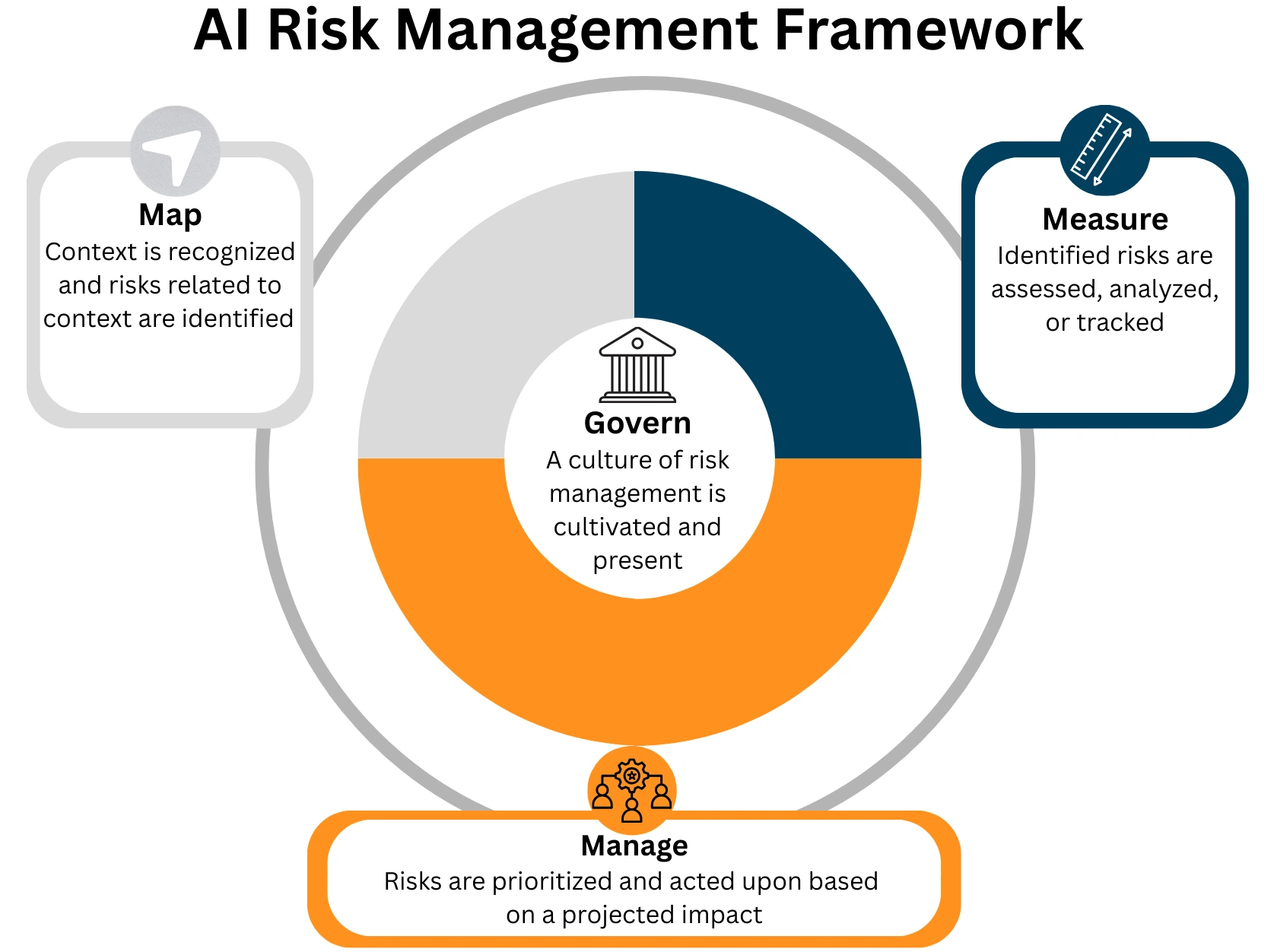

NIST AI Risk Management Framework

The NIST AI Risk Management Framework is the most comprehensive and pragmatic foundation available today for managing AI risk at scale. Its strength is not in prescribing controls, but in structuring how organizations think, decide, and govern AI risk across the entire lifecycle.

The NIST AI RMF is the dominant framework in the US. It takes a lifecycle approach organized around four core functions:

- Govern: Assigns ownership, approval authority, and escalation paths for AI systems in production.

- Map: Defines system intent, affected users, data sources, assumptions, autonomy level, and usage boundaries.

- Measure: Evaluates bias, reliability, robustness, security, and drift through continuous evaluations spanning model quality, security abuse cases, and policy compliance, with results logged as auditable evidence.

- Manage: Enforces risk controls through runtime enforcement layers, including AI gateways, guardrail and guardian patterns, incident response workflows, retraining triggers, and controlled system retirement.
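As one illustration of the Measure function, the sketch below computes a drift score and packages the result as an evidence record that can be logged. The text does not name a drift metric, so the Population Stability Index (PSI) is used here as one common choice. The function names, the record fields, and the 0.2 threshold are assumptions for illustration only.

```python
import json
import math
from datetime import datetime, timezone
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index over pre-binned proportions."""
    if len(expected) != len(actual):
        raise ValueError("expected and actual must have the same number of bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)   # avoid log(0) on empty bins
        total += (a - e) * math.log(a / e)
    return total


def measure_drift(system: str, expected: Sequence[float],
                  actual: Sequence[float], threshold: float = 0.2) -> dict:
    """Return an evidence record; threshold is an assumed rule of thumb."""
    value = psi(expected, actual)
    return {
        "system": system,
        "metric": "psi",
        "value": round(value, 4),
        "threshold": threshold,
        "breach": value > threshold,   # a breach could feed a retraining trigger
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


record = measure_drift("demo-model", [0.5, 0.5], [0.9, 0.1])
audit_line = json.dumps(record)   # one JSON line per evaluation, ready to append to a log
```

Emitting a structured record for every evaluation, rather than only on failure, is what turns a measurement into evidence that the Manage function and auditors can act on.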

Source: www.nist.gov

ISO/IEC 42001 (AI Management System)

ISO/IEC 42001 complements AI risk assessment frameworks by standardizing how AI is governed, audited, and continuously improved across the organization, covering policy, roles, controls, and audits.

ISO/IEC 42001 primarily defines an organizational management system. It does not, on its own, specify technical runtime controls or enforcement mechanisms for AI systems.

In practice, an AI management system helps organizations:

- Define clear ownership and decision authority for AI use

- Systematically identify and assess AI-related risks

- Enforce transparency and accountability across teams

- Control data quality and monitor model performance

- Address ethical, legal, and societal impact requirements

- Monitor and govern AI systems across their lifecycle

Source: www.iso.org

MITRE ATLAS

MITRE ATLAS focuses on adversarial threats against AI systems, extending traditional threat modeling to account for how machine learning models can be manipulated, degraded, or exploited.

In practice, it helps organizations:

- Identify AI-specific attack techniques such as data poisoning, prompt injection, evasion, and model extraction

- Map adversarial behaviors across the AI lifecycle, from data collection and training to inference and deployment

- Analyze threat paths that exploit model behavior rather than software vulnerabilities

- Design defensive controls aligned to real-world attacker tactics, not hypothetical risks

- Integrate AI threats into security operations alongside traditional cyber threat models
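A simple way to use a threat catalogue like this in an assessment is to check which techniques have no mapped defensive control. The sketch below uses the four techniques named above. The control names are hypothetical placeholders, not ATLAS mitigations, and the mapping itself is invented for illustration.

```python
from typing import Dict, List

# Techniques from the list above; control names are hypothetical placeholders.
CONTROLS_BY_TECHNIQUE: Dict[str, List[str]] = {
    "data poisoning": ["dataset provenance check"],
    "prompt injection": ["input guardrail", "output filter"],
    "evasion": [],
    "model extraction": ["rate limiting"],
}


def coverage_gaps(mapping: Dict[str, List[str]]) -> List[str]:
    """Return techniques that have no defensive control mapped to them."""
    return sorted(t for t, controls in mapping.items() if not controls)


gaps = coverage_gaps(CONTROLS_BY_TECHNIQUE)
```

In this invented mapping, `gaps` contains only `"evasion"`, which is the kind of finding that would be recorded as an open risk and assigned an owner.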

MITRE ATLAS does not replace AI risk assessment frameworks. It complements them by providing tactical threat intelligence required to secure AI systems against deliberate misuse and attack.

Source: atlas.mitre.org

Together, MITRE ATLAS and the OWASP LLM Top 10 guide adversarial testing, threat modeling, and runtime defense design, shaping security test cases, guardrail coverage, and abuse detection for AI systems in production.

The "SAFE-AI" Framework Alignment

SAFE-AI is designed to help organizations secure AI-enabled systems by identifying and addressing AI-specific threats and concerns systematically, not by treating AI like traditional software.

Here is what SAFE-AI addresses:

- AI-specific threat expansion: Adversarial inputs, poisoning, bias exploitation, decision manipulation, and data leakage.

- Supply chain vulnerabilities: Risk from third-party models, datasets, and tools with unclear provenance.

- Assessment-grade control alignment: AI threats mapped to security controls, such as NIST SP 800-53, with testable controls, defined verification steps, and auditable evidence across pre-production and runtime.

Source: atlas.mitre.org

OWASP LLM Top 10

OWASP LLM Top 10 complements AI risk assessment frameworks by providing a standardized taxonomy of LLM-specific security risks that must be evaluated within broader governance and risk management structures.

LLMs introduced an entirely new attack surface. Prompt injection, data leakage, insecure output handling, and overprivileged agents are not edge cases. They are default failure modes.

Here is how the OWASP LLM Top 10 adds value within an AI risk assessment framework:

- Captures critical LLM-specific vulnerabilities that differ from general AI risks

- Provides practical defenses for each

- Enables systematic risk assessment (map these risks to your LLM application)

- Essential if your organization uses or plans to use LLMs

- Free guidance from the trusted OWASP organization
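Insecure output handling, one of the failure modes mentioned above, comes down to treating model output as untrusted input. The following is a minimal sketch for the case where a reply is embedded in an HTML page. The function name `render_reply` and the surrounding markup are assumptions, and a real application would also need context-specific handling for SQL, shell commands, or tool calls.

```python
import html


def render_reply(model_output: str) -> str:
    """Escape LLM output before embedding it in HTML, as with any untrusted input."""
    safe = html.escape(model_output, quote=True)  # escapes &, <, >, and quotes
    return '<div class="reply">' + safe + "</div>"


rendered = render_reply("<script>alert(1)</script>")
```

The escaped reply displays as text instead of executing in the browser, so a prompt-injected payload cannot turn into cross-site scripting through this path.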

Frameworks vs Capability Layers

AI risk assessment frameworks define governance and decision logic. Risk reduction happens only when those requirements are enforced through concrete technical capabilities.

In practice, frameworks map to the following implementation layers across the AI stack:

- AI Governance: Policy definition, risk ownership, approval workflows, and escalation enforced across teams.

- AI Security Posture Management (AI-SPM): AI asset discovery, inventory, configuration visibility, and misconfiguration-driven risk detection.

- Information Governance: Data access controls, retention enforcement, lineage tracking, and oversharing prevention across training and inference.

- AI Runtime Defense: Inference guardrails, prompt and response filtering, anomaly detection, PII redaction, and real-time blocking.

- AI Usage Control: Governance of embedded and external AI usage, including shadow AI detection and policy enforcement.

- AI Security Testing: Continuous offensive testing for prompt injection, jailbreaks, model inversion, data leakage, and abuse patterns.

- AI Supply Chain and AI-BOM: Provenance and integrity tracking for models, datasets, tools, and third-party dependencies.
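As a small example of what a runtime defense layer does, the sketch below redacts two kinds of PII from text before it reaches a model or a log. The patterns (email addresses and US SSN-style numbers) and the placeholder tokens are assumptions chosen for illustration. Production redaction typically needs broader detection than two regular expressions.

```python
import re

# Illustrative patterns only; real deployments need wider PII coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact(text: str) -> str:
    """Replace matched PII with placeholder tokens before inference or logging."""
    text = EMAIL.sub("[EMAIL]", text)
    text = SSN.sub("[SSN]", text)
    return text


clean = redact("Contact jane@example.com, SSN 123-45-6789")
```

Applying the same function to both prompts and responses keeps the policy consistent in each direction of the inference path.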

Frameworks define intent. Capability layers determine whether that intent survives contact with production.

While frameworks provide structure, effective AI risk assessment depends on how they are applied in practice. Strategy, prioritization, and operational discipline determine whether these frameworks enable scale or become documentation overhead.

In our upcoming webinar, we focus on practical AI risk assessment strategies and best practices drawn from real deployments, showing how organizations translate frameworks into repeatable, defensible risk decisions.

The Bottom Line: Why Leaders Need This

- Risk is manageable with structure: A framework turns a vague "AI is risky" into concrete, actionable processes

- Scale without chaos: A framework lets an organization deploy 100+ models confidently rather than 5 models cautiously

- Regulatory defense: A documented process and governance records give the organization a defensible position if regulators investigate

- Competitive advantage: Organizations with governance move faster (clear approvals), operate more safely (issues are caught early), and earn more trust (they can prove it)

- Accountability: Clear roles, decision records, and ownership prevent finger-pointing when issues arise
